The data center monitoring system is a complex system that combines software and hardware. This article describes in detail the composition, technologies, and application scenarios of the core software modules of the monitoring and management system, as a reference for system design.

The monitoring and management system consists of four major systems: the monitoring system, the operation management system, the master control center system, and the basic service system. The core modules of these systems are described below.

First, the monitoring system

The monitoring system consists of two subsystems: the information acquisition subsystem and the information processing subsystem.

1, information acquisition subsystem

To achieve modular design and distributed deployment, and to improve the stability of the monitoring and management system, the information acquisition subsystem has been largely implemented in hardware: dedicated hardware devices or hardware modules have replaced the traditional software-only approach to information collection.

The acquisition module has three main functions: first, to provide interfaces of various forms for connecting the different monitored and managed objects; second, to parse the protocols used by the collected information; and third, to upload the parsed information to the processing unit in a unified format.
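To make the second and third functions concrete, here is a minimal Python sketch of an acquisition module that parses two different device protocols into one unified record. Both frame formats, the `Reading` schema, the protocol names, and the `acquire` function are hypothetical, chosen only to show the pattern of "one parser per interface, one output format for all".

```python
import struct
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Reading:
    """Unified record sent to the processing unit (hypothetical schema)."""
    device_id: str
    metric: str
    value: float
    timestamp: float


def parse_text_frame(frame: bytes, ts: float) -> List[Reading]:
    """Hypothetical text protocol, e.g. b'dev=UPS01;voltage=229.8;load=41.5'."""
    fields = dict(part.split("=", 1) for part in frame.decode("ascii").strip().split(";"))
    device = fields.pop("dev")
    return [Reading(device, name, float(val), ts) for name, val in fields.items()]


def parse_binary_frame(frame: bytes, ts: float) -> List[Reading]:
    """Hypothetical 6-byte frame: unsigned 16-bit device number, then signed
    16-bit temperature and humidity in tenths, all big-endian."""
    dev_no, temp_x10, hum_x10 = struct.unpack(">Hhh", frame)
    device = f"TH{dev_no:02d}"
    return [
        Reading(device, "temperature", temp_x10 / 10, ts),
        Reading(device, "humidity", hum_x10 / 10, ts),
    ]


# Protocol registry: one parser per interface type, same output type for all.
PARSERS: Dict[str, Callable[[bytes, float], List[Reading]]] = {
    "text": parse_text_frame,
    "binary": parse_binary_frame,
}


def acquire(protocol: str, frame: bytes, ts: float) -> List[Reading]:
    """Parse a raw frame with the parser registered for its protocol."""
    return PARSERS[protocol](frame, ts)
```

Supporting a new kind of monitored object then means registering one more parser; the processing unit only ever sees `Reading` records.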

2, information processing subsystem

The information processing subsystem is the core subsystem that carries out the monitoring function of the monitoring and management system. To process, compute, and store large-scale data in real time, flexibly, and accurately, two key modules need to be designed: the complex event analysis and processing module and the adjustment and control module.

(1) Complex event analysis and processing module

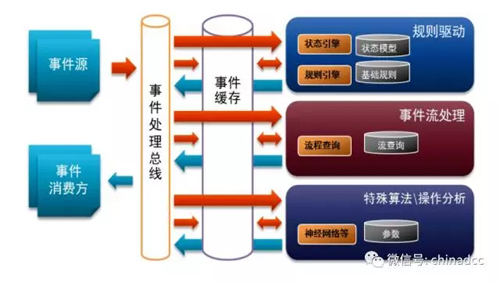

The Complex Event Processing (CEP) module first captures basic events and then analyzes and collates them to find more meaningful events (composite events). Analyzing and collating events to identify composite events is the core and the most difficult part of CEP. Figure 1 shows how the complex event analysis and processing module works: real-time data enters the event processing bus as an event source; the CEP engine processes this real-time data, together with cached historical data, according to the specified rules; and the resulting meaningful events are delivered to consumers through the event processing bus.

Figure 1 The working principle of the complex event analysis and processing module

A typical application: when a power failure occurs in a data center, it triggers a large number of device alarms. The complex event analysis and processing module can identify the real cause within this flood of events and combine all the device alarms into a single power failure alarm.
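The power-failure example can be sketched as a root-cause correlation rule. This minimal Python version assumes a hypothetical topology table (`FEED_OF`) mapping each device to its power feed and a 60-second correlation window; it only folds device alarms raised at or after the power-failure event, whereas a production rule would usually also look slightly backward, since device alarms can arrive before the root event.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Event:
    source: str   # device or power feed that raised the event
    kind: str     # e.g. "power_failure", "device_offline"
    time: float   # seconds


@dataclass
class CompositeAlarm:
    root: Event                                         # root-cause event
    folded: List[Event] = field(default_factory=list)  # device alarms it explains


# Hypothetical topology: which power feed supplies each device.
FEED_OF: Dict[str, str] = {
    "srv-01": "feed-A", "srv-02": "feed-A", "sw-01": "feed-A", "srv-09": "feed-B",
}


def correlate(events: List[Event], window: float = 60.0) -> Tuple[List[CompositeAlarm], List[Event]]:
    """Fold device alarms raised within `window` seconds after a power failure
    on the device's feed into one composite power-failure alarm."""
    composites: List[CompositeAlarm] = []
    standalone: List[Event] = []
    active: Dict[str, CompositeAlarm] = {}  # feed -> its latest power-failure alarm
    for ev in sorted(events, key=lambda e: e.time):
        if ev.kind == "power_failure":
            root_alarm = CompositeAlarm(root=ev)
            active[ev.source] = root_alarm
            composites.append(root_alarm)
            continue
        feed = FEED_OF.get(ev.source)
        owner = active.get(feed) if feed else None
        if owner is not None and ev.time - owner.root.time <= window:
            owner.folded.append(ev)   # explained by the power failure
        else:
            standalone.append(ev)     # unrelated alarm, passed through
    return composites, standalone
```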

The complex event analysis and processing module must handle a massive volume of events, so its processing load is heavy. Unlike traditional database-based data processing, complex event processing is driven by the data stream generated in real time, and all calculations are completed in memory. This can improve performance by an order of magnitude and meets real-time processing requirements. The module's event matching rules are the key to effective processing: in use, whenever the logical relationships among monitored objects change, the matching rules must be updated to keep the processing correct.
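As an illustration of an event-driven, in-memory matching rule, here is a minimal Python sketch of a sliding-window threshold rule. The rule type, its parameters, and the output dictionary are hypothetical; the sketch assumes events for a key arrive in timestamp order.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class ThresholdWindowRule:
    """Hypothetical matching rule: emit a composite event when at least `count`
    events of type `kind` arrive for the same key within `window` seconds.
    All state lives in memory and is updated as each event arrives."""

    def __init__(self, kind: str, count: int, window: float) -> None:
        self.kind, self.count, self.window = kind, count, window
        self._recent: Dict[str, Deque[float]] = defaultdict(deque)

    def on_event(self, key: str, kind: str, ts: float) -> Optional[dict]:
        if kind != self.kind:
            return None
        times = self._recent[key]
        times.append(ts)
        # Evict timestamps that have slid out of the window.
        while times and ts - times[0] > self.window:
            times.popleft()
        if len(times) >= self.count:
            times.clear()  # one burst produces one composite event
            return {"kind": f"{self.kind}_burst", "key": key, "time": ts}
        return None
```

Because the rule's parameters are data rather than code, "maintaining the matching rules" when the monitored topology changes amounts to editing rule definitions such as `ThresholdWindowRule("high_temp", 3, 60)`.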

(2) Adjustment and Control Module

For devices that affect the security of users' business systems, the monitoring and management system follows the principle of monitoring only, without control. Non-core services and systems, such as environmental equipment (e.g. fresh-air fans and lighting) and security systems (e.g. closed-circuit video surveillance and access control and attendance systems), can accept control inputs; for these, the adjustment and control module can be used to fine-tune the data center and manage it intelligently.

The adjustment and control module works in two ways: one is manual adjustment and control, and the other is automatic adjustment and control.

Manual adjustment and control is relatively simple: a person makes the judgment and decision, forms the control instruction, and delivers it to the corresponding equipment through the monitoring system. In this mode, regulation and control rely entirely on personal experience and are therefore fairly arbitrary. Common manual methods include opening doors remotely and manually adjusting the set temperature of each air conditioner according to the room temperature. Manual adjustment and control can be performed not only through the monitoring system but also by telephone, SMS, and similar channels; for example, a door can be opened by phone, and the status of key equipment can be queried by SMS.

Automatic adjustment and control differs in that human experience is built into the monitoring system, which forms adjustment and control logic from this empirical data. When the data collected by the monitoring system flows into the regulation and control logic unit, the unit generates the expected adjustment and control instructions and delivers them to the corresponding devices, achieving unattended self-regulation. One of the most common applications of this technology is linkage control, such as opening doors when a fire alarm occurs, starting video recording when a door opens, and playing real-time video when a fire alarm occurs. With the development of green data centers, this technology is increasingly applied to data center energy saving; air-conditioning group control is one example.
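Linkage control is often expressed as a rule table that maps a trigger event to device commands. The Python sketch below encodes the linkages named above; the rule format, event names, zone names, and the `send` callback are all hypothetical stand-ins for the monitoring system's real command channel.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class LinkageRule:
    trigger: str               # event type that starts the linkage
    zone: str                  # area the rule applies to; "*" matches any area
    actions: Tuple[str, ...]   # commands delivered to devices, in order


# Hypothetical rule table encoding the linkages described in the text.
RULES: List[LinkageRule] = [
    LinkageRule("fire_alarm", "*", ("open_doors", "play_live_video")),
    LinkageRule("door_opened", "room-A", ("start_recording",)),
]


def on_event(event_type: str, zone: str, send: Callable[[str, str], None]) -> List[str]:
    """Match the incoming event against the rule table and deliver each action."""
    issued: List[str] = []
    for rule in RULES:
        if rule.trigger == event_type and rule.zone in ("*", zone):
            for action in rule.actions:
                send(action, zone)
                issued.append(action)
    return issued
```

Keeping the rules as data is what lets operators "build in" their experience without changing code.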

Second, the operation and management system

1, asset management module

Asset management is part of asset and configuration management. In practice, it is generally defined as managing the physical assets of the data center through the IT asset lifecycle: addition (new assets), inbound (into storage), outbound (out of storage), online (into the machine room), offline (maintenance), and reduction (retirement, loss). The asset management of the facility monitoring and management system is thus lifecycle management of the data center's physical assets, in which each asset also carries some basic attribute information related to infrastructure management.
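The lifecycle stages above can be modeled as a small state machine that rejects invalid moves. In this Python sketch the set of allowed transitions is a hypothetical policy chosen for illustration; a real system would take it from the organization's asset procedures.

```python
from enum import Enum


class AssetState(Enum):
    NEW = "new"
    IN_STOCK = "inbound"        # received into storage
    OUT_OF_STOCK = "outbound"   # issued from storage
    ONLINE = "online"           # installed in the machine room
    OFFLINE = "offline"         # removed for maintenance
    RETIRED = "retired"
    LOST = "lost"


# Hypothetical allowed transitions between lifecycle states.
TRANSITIONS = {
    AssetState.NEW: {AssetState.IN_STOCK},
    AssetState.IN_STOCK: {AssetState.OUT_OF_STOCK, AssetState.RETIRED, AssetState.LOST},
    AssetState.OUT_OF_STOCK: {AssetState.ONLINE, AssetState.IN_STOCK, AssetState.LOST},
    AssetState.ONLINE: {AssetState.OFFLINE, AssetState.LOST},
    AssetState.OFFLINE: {AssetState.ONLINE, AssetState.IN_STOCK, AssetState.RETIRED, AssetState.LOST},
    AssetState.RETIRED: set(),
    AssetState.LOST: set(),
}


class Asset:
    def __init__(self, asset_id: str) -> None:
        self.asset_id = asset_id
        self.state = AssetState.NEW
        self.history = [AssetState.NEW]   # full lifecycle trail for auditing

    def move_to(self, new_state: AssetState) -> None:
        """Apply a lifecycle transition, refusing moves the policy does not allow."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"{self.asset_id}: {self.state.value} -> {new_state.value} not allowed")
        self.state = new_state
        self.history.append(new_state)
```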

IT asset management scope and classification

(1) Classification of IT assets

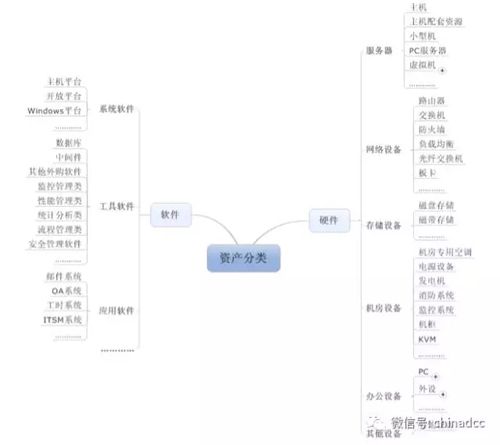

By form, IT assets fall into two major categories: software and hardware. Software mainly includes system software, tool software, and application software; hardware mainly includes servers, network equipment, storage, IT office equipment, and site-facility equipment, as shown in Figure 2.

Figure 2 Asset Classification

(2) The scope of asset management

The scope of asset management covers the data center's servers, network equipment, storage, IT office equipment, site-facility equipment, system software, tool software, and application software (as shown in Figure 2), together with the following attribute information related to asset and infrastructure operation and maintenance management:

Basic attributes: these record the basic information of the asset or device, including manufacturer, model, power consumption, height (servers, switches, and similar devices only), weight, purchase date, price, and responsible person or department. Different types of equipment may also have proprietary attributes that reflect their specific characteristics;

User attributes: these record user management information for the asset or device, including user name, user category, permission level, and department. They can also include a password attribute to support password issuance, recovery, periodic changes, and other activities in the security management process;

Hardware configuration information: this records the hardware configuration of the device, including installed hard disks, network cards, fiber-optic cards, and other accessories;

Maintenance information: this records the maintenance information of the equipment, including service provider, service scope, service level, and service assessment, and is used by service contract management activities and function modules.
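The four attribute groups map naturally onto a nested record. The Python sketch below uses hypothetical class and field names; the optional password attribute is deliberately left out of it.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class BasicAttributes:
    manufacturer: str
    model: str
    power_w: float
    height_u: Optional[int]     # only meaningful for rack devices such as servers and switches
    weight_kg: float
    purchase_date: date
    price: float
    owner: str                  # responsible person or department
    extra: dict = field(default_factory=dict)  # type-specific (proprietary) attributes


@dataclass
class UserAccount:
    user_name: str
    user_class: str
    permission_level: int
    department: str


@dataclass
class MaintenanceContract:
    provider: str
    scope: str
    service_level: str
    assessment: str = ""


@dataclass
class AssetRecord:
    asset_id: str
    basic: BasicAttributes
    users: List[UserAccount] = field(default_factory=list)
    hardware: List[str] = field(default_factory=list)   # disks, NICs, fiber cards, ...
    maintenance: Optional[MaintenanceContract] = None
```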

(3) Main function modules

Based on the scope covered by asset management, asset management needs to achieve the following functions:

The machine room asset management module records, queries, and updates the hardware and software asset information related to operation and maintenance services, including servers, network devices, storage devices, and optical switching devices of all types. It also uses radio frequency technology to provide access control for assets and machine rooms, rapid location, periodic inventory, and other functions;

The inventory asset management module records and manages assets, equipment, and consumables in stock, including inbound and outbound management, queries, inventory counts, and other activities;

The media management module records and manages service-related media information, including labels, storage locations, and stored contents, and supports rapid physical location of media such as optical disks and tapes;

The consumables management module records and manages information about service-related consumables, such as network cables and optical fibers, and the related activities. It controls the use and storage of consumables and can also provide reserve forecasting and consumption analysis to strengthen control over them;

The basic information management module manages and maintains the basic data related to assets, so that users can select values rather than type them by hand; this improves usability and reduces both data-entry workload and the probability of errors;

The report statistics module counts and summarizes asset changes, inbound and outbound records, the use and consumption of consumables, and media transfer-in and transfer-out information, helping managers make decisions and manage the infrastructure;

The system management module includes user management, permission control, department management, security control, and similar functions. It controls authorization, maintenance, and access for the asset database, making the system easier to use while controlling risk;

Workflow management handles the application and approval procedures for the warehousing, use, going online, and retirement of assets.

(4) Asset Management Based on Electronic Identification Numbers

There are many problems with conventional asset management methods:

Assets are recorded manually (some use one-dimensional barcodes, which are easily soiled and hard to read) and are collated and summarized by hand, which takes a long time and is inefficient and error-prone;

Physical information and the information in the management information system cannot be kept in sync, so the current location and status of assets (idle, in normal use, under maintenance, scrapped) cannot be known in real time;

It is difficult to obtain accurate asset information in a timely manner (asset information is often updated through laborious manual inventory counts).

Electronic identification technology is the key to solving these problems. It reads quickly and requires no human intervention in the reading process, so assets can be identified and inventoried quickly, and information on important fixed assets can be grasped accurately and promptly.

Electronic identification effectively integrates real-time asset monitoring with asset management, keeping physical information and system information synchronized in real time. This enables automated lifecycle tracking of assets and provides an accurate basis for enterprise investment decisions and sound asset allocation. It also achieves the "simultaneous management of people, place, time, and materials" in asset management, effectively reducing and controlling daily management and production costs, saving the manpower and material resources otherwise spent every year on asset inventory and unnecessary allocation, avoiding asset losses from various causes, and improving the efficiency of corporate management.
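The core of an electronic-tag inventory is reconciling what the readers saw against the asset register. This minimal Python sketch assumes that each tag ID equals its asset ID and that each read reports the reader's location; both are simplifying assumptions, and the function name is hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def reconcile(register: Dict[str, str],
              reads: Iterable[Tuple[str, str]]) -> Tuple[List[str], List[Tuple[str, str, str]], List[str]]:
    """Compare the asset register (asset_id -> recorded location) with one
    inventory pass of tag reads (tag_id, reader location).

    Returns (missing, misplaced, unregistered):
      missing      - registered assets no reader saw
      misplaced    - (asset_id, recorded location, observed location)
      unregistered - tags seen that are not in the register
    """
    seen: Dict[str, str] = {}
    for tag_id, location in reads:
        seen[tag_id] = location  # if a tag is read twice, the last read wins
    missing = sorted(a for a in register if a not in seen)
    misplaced = sorted((a, register[a], seen[a]) for a in register
                       if a in seen and seen[a] != register[a])
    unregistered = sorted(t for t in seen if t not in register)
    return missing, misplaced, unregistered
```

Running this after every read cycle, rather than at an annual count, is what keeps the system's view of each asset's location current.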

2, capacity management module

Capacity is what the data center can provide. Capacity management subdivides and quantifies the processing capability or system capacity of the various infrastructures and adjusts and configures them according to business requirements, so as to use resources rationally, balance the load, and ensure that business goals are achieved while the main business requirements are met.

The capacity management of the infrastructure monitoring and management system focuses mainly on the support capabilities of the data center infrastructure in terms of space, power, and cooling, that is, SPC (space, power, cooling) capacity management.

(1) The composition of capacity management

SPC capacity management mainly includes the following parts:

Performance Management: measures, monitors, and adjusts the performance of the infrastructure or its components to achieve optimal performance;

Application Sizing: allocates appropriate resources to applications and devices to meet current and planned future business requirements;

Capacity Modeling: identifies the factors involved in capacity management, together with their weights and other information, and uses information technology to build the corresponding capacity model;

Workload Management: monitors and measures load changes to obtain real-time capacity usage, which guides capacity planning and expansion;

Capacity Planning: creates and maintains capacity plans that meet the needs of business development;

Demand Management: makes more reasonable use of system support capabilities and related resources by adjusting the load across systems or the traffic load during peak hours.
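Workload management feeds capacity planning with load trends. One simple way to turn monthly load measurements into a planning signal is a least-squares linear trend, as in the Python sketch below. Linear extrapolation is an assumption made here for illustration; real load growth is often stepwise or seasonal.

```python
from typing import Optional, Sequence


def months_until_full(monthly_load: Sequence[float], capacity: float) -> Optional[float]:
    """Fit a least-squares line to monthly load samples (oldest first) and
    return how many months after the latest sample the trend reaches
    `capacity`. Returns None when the trend is flat or falling."""
    n = len(monthly_load)
    if n < 2:
        raise ValueError("need at least two monthly samples")
    mean_x = (n - 1) / 2
    mean_y = sum(monthly_load) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(monthly_load))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    month_full = (capacity - intercept) / slope   # x where the trend line hits capacity
    return max(0.0, month_full - (n - 1))
```

For example, a power load of 100, 110, 120, and 130 kW against a 200 kW capacity gives a slope of 10 kW per month and about 7 months of headroom after the last sample. The same function applies to any SPC dimension (rack units, power, or cooling).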

(2) Main function modules

Based on the scope defined by SPC capacity management, SPC capacity management needs to implement the following functional modules:

SPC capacity model management, including creating capacity models, maintaining node information, and setting parameters; it must also dynamically associate monitoring data with the model;

Resource pre-allocation management, including searching for, preempting, and canceling available resources. Resource search and preemption must take into account all the elements defined in the SPC capacity model. For management purposes, it must also provide preemption auditing, equipment onboarding, project information management, and other functions;

Reports and statistics, including report customization, usage statistics, trend analysis, and optimization suggestions, mainly used for capacity status analysis and capacity planning;

System management functions, including rights management, user management, and historical data management, which support the other capacity management functions.
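Resource pre-allocation can be sketched as a search over racks that checks all three SPC dimensions at once, plus preempt and cancel operations. In this Python sketch the `Rack` model, the best-fit ordering, and the assumption that a device needs as much cooling as the electrical power it draws are all illustrative simplifications, not a prescribed design.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Rack:
    name: str
    free_u: int              # space: free rack units
    free_power_kw: float     # power: remaining supply budget
    free_cooling_kw: float   # cooling: remaining cooling capacity

    def fits(self, u: int, kw: float) -> bool:
        # Simplifying assumption: a device needs as much cooling as the power it draws.
        return self.free_u >= u and self.free_power_kw >= kw and self.free_cooling_kw >= kw


@dataclass
class Reservation:
    rack: Rack
    u: int
    kw: float
    project: str
    active: bool = True


def search(racks: List[Rack], u: int, kw: float) -> List[Rack]:
    """Racks that satisfy space, power and cooling at once, tightest fit first."""
    candidates = [r for r in racks if r.fits(u, kw)]
    return sorted(candidates, key=lambda r: (r.free_power_kw - kw, r.free_u - u))


def preempt(rack: Rack, u: int, kw: float, project: str) -> Reservation:
    """Hold capacity on a rack for a project before the equipment arrives."""
    if not rack.fits(u, kw):
        raise ValueError(f"{rack.name} cannot hold {u}U / {kw} kW")
    rack.free_u -= u
    rack.free_power_kw -= kw
    rack.free_cooling_kw -= kw
    return Reservation(rack, u, kw, project)


def cancel(res: Reservation) -> None:
    """Release a reservation; calling it twice has no further effect."""
    if res.active:
        res.rack.free_u += res.u
        res.rack.free_power_kw += res.kw
        res.rack.free_cooling_kw += res.kw
        res.active = False
```

Ordering candidates by tightest fit is one design choice for limiting fragmentation; a rack with plenty of space but little cooling is correctly excluded, which is exactly the case a space-only search would miss.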

3, operation and maintenance management module

Operation and maintenance management guarantees the stable operation of the data center and makes up most of its day-to-day management. It supports troubleshooting, routine maintenance, regular inspections, and the management of on-duty personnel. The operation and maintenance management module provides an electronic support platform for these activities and is briefly described below.

(1) The scope of operation and maintenance management

In general, the scope of operations management covers the following:

Failure response and processing, including monitoring, response, dispatch and work order management of various types of equipment failures;

Preventive maintenance management, including periodic inspection management, mobile inspection management, and daily inspections;

Statistical analysis, including the operational efficiency of service teams, work order processing, and workload, as well as statistical analysis of operational status;

Knowledge sharing and accumulation, including archiving and sharing of troubleshooting experience, basic system data, and emergency plans.

(2) Main function modules

According to the scope and main activities of the operation and maintenance management, the following functional modules need to be included to match and support the corresponding operation and maintenance activities:

Incident Management, for fault response, analysis, dispatch, and subsequent work order management. It supports and controls the cooperation of the service teams at all levels defined in service management and the flow of work orders, and is the basic function on which operation and maintenance management depends;

Preventive maintenance management, mainly periodic inspections and mobile inspections, for the preventive maintenance of equipment: periodic inspection and maintenance allow equipment to be repaired as soon as an abnormality appears, preventing major failures;

Knowledge Management, which provides an information-sharing platform for day-to-day fault management so that handling experience can be saved and shared and collaboration becomes more efficient;

Service Level Management, which ensures and quantifies the overall quality of service delivery against the customer's service contract, including response time, resolution time, and resolution rate;

System management, including user management, department management, role management, and rights management, is used to support the implementation of other functions;

Statistical analysis is used for the statistics and analysis of daily work orders to analyze such indicators as processing efficiency, responsiveness, and workload so as to facilitate the optimization and assessment of the operation and maintenance services.
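Service level management and statistical analysis both reduce to computing indicators over work orders. This Python sketch computes response time, resolution time, resolution rate, and the share of orders within target; the `WorkOrder` fields and the 15-minute and 240-minute targets are hypothetical placeholders for what a real service contract would define.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class WorkOrder:
    opened: float                 # minutes from a common reference time
    responded: Optional[float]    # None if nobody has responded yet
    resolved: Optional[float]     # None if the order is still open


def _mean(values: List[float]) -> Optional[float]:
    return sum(values) / len(values) if values else None


def sla_metrics(orders: List[WorkOrder], response_target: float = 15.0,
                resolution_target: float = 240.0) -> Dict[str, Optional[float]]:
    """Summarize work orders against hypothetical SLA targets (minutes)."""
    response_times = [o.responded - o.opened for o in orders if o.responded is not None]
    resolution_times = [o.resolved - o.opened for o in orders if o.resolved is not None]
    return {
        "avg_response_min": _mean(response_times),
        "avg_resolution_min": _mean(resolution_times),
        "resolution_rate": len(resolution_times) / len(orders) if orders else None,
        "response_within_target": _mean([1.0 if t <= response_target else 0.0 for t in response_times]),
        "resolution_within_target": _mean([1.0 if t <= resolution_target else 0.0 for t in resolution_times]),
    }
```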

4, energy management module

As energy prices rise, energy costs account for a growing share of data center operating costs, and data center energy management has become a hot topic. The concept of "low carbon" has begun to be accepted and valued by data center managers. To promote energy conservation and emission reduction in data centers, the Ministry of Industry and Information Technology's "12th Five-Year Plan for Industrial Energy Conservation" proposed that "by 2015, the PUE of data centers should drop by 8%", and the "Cloud Computing Demonstration Project" organized by the National Development and Reform Commission requires data center PUE to be reduced to 1.5 or below. Meeting these requirements calls for energy management.

1) Energy efficiency assessment

Power usage effectiveness (PUE) is currently the energy efficiency parameter recognized both internationally and domestically. It is defined as the ratio of the total energy consumption of the data center to the energy consumption of its IT equipment. In the "Data Center Energy Efficiency Evaluation Guide" published on March 16, 2012, the Cloud Computing Development and Policy Forum pointed out that, in addition to PUE, energy efficiency evaluation should also consider parameters such as CLF (cooling load factor), PLF (power load factor), and RER (renewable energy utilization), which together reflect data center energy consumption more accurately.
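For reference, the four indicators are commonly written as energy ratios over the same period. The symbols here are introduced for illustration: $E_{\mathrm{total}}$ is total data center energy, $E_{\mathrm{IT}}$ is IT equipment energy, $E_{\mathrm{cooling}}$ is cooling equipment energy, $E_{\mathrm{power}}$ is the energy consumed by the power supply and distribution system itself, and $E_{\mathrm{renewable}}$ is the energy supplied from renewable sources. The guide should be consulted for the exact measurement boundaries.

```latex
\mathrm{PUE} = \frac{E_{\mathrm{total}}}{E_{\mathrm{IT}}}, \qquad
\mathrm{CLF} = \frac{E_{\mathrm{cooling}}}{E_{\mathrm{IT}}}, \qquad
\mathrm{PLF} = \frac{E_{\mathrm{power}}}{E_{\mathrm{IT}}}, \qquad
\mathrm{RER} = \frac{E_{\mathrm{renewable}}}{E_{\mathrm{total}}}
```

Since $E_{\mathrm{total}} \approx E_{\mathrm{IT}} + E_{\mathrm{cooling}} + E_{\mathrm{power}}$ (plus small loads such as lighting), dividing by $E_{\mathrm{IT}}$ gives $\mathrm{PUE} \approx \mathrm{CLF} + \mathrm{PLF} + 1$.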

The key to energy management is the monitoring and analysis of energy consumption. Through monitoring, actual and continuous power consumption data are obtained. Based on these data, energy efficiency data of the data center is obtained according to scientific calculation methods.

At present, energy efficiency assessments can be conducted according to the requirements of the Data Center Energy Efficiency Evaluation Guide.

2) Monitoring and calculation of energy consumption indicators

To support energy monitoring and analysis, the monitoring and management system should also include an energy monitoring and analysis system. This system uses acquisition devices at key nodes of the data center power distribution system to monitor parameters such as power, current, and voltage; it analyzes and aggregates the collected parameters and presents, in report form, the energy consumption assessment of each energy efficiency evaluation domain of the data center, as a reference for energy optimization and adjustment. With this system, one can not only understand the energy consumption status of the data center but also compare energy management results horizontally and vertically.

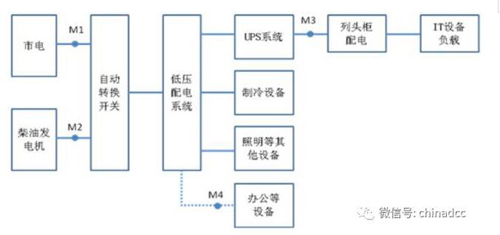

Figure 3 Diagram of common power supply and distribution system

The figure above is a schematic diagram of a typical data center power supply and distribution system. Based on it, several options for measuring energy consumption are described below.

Under normal circumstances, the data center is powered by the mains, so the measurement point for its total power consumption should be placed before the mains input transformer. When the mains fails, the diesel generator output serves as the measurement point for total power consumption. In a multi-purpose building, calculating the total power consumption of the data center requires subtracting the measured consumption of other loads, such as offices.

Strictly speaking, IT equipment energy consumption should be measured at the power input of each IT device and the values summed. Given the large number of IT devices, however, this would greatly increase the measurement workload and cost. In practice, therefore, measurements are generally taken at the UPS output or at the input of the column-head power distribution cabinets, and the sum of these values is used as the IT equipment energy consumption. That is:

PUE = (PM1 + PM2 - PM4) / PM3

Here PM1 + PM2 is the total consumption measured at the mains and diesel-generator inputs, PM4 is the consumption of other loads such as offices, which is subtracted, and PM3 is the IT equipment consumption.
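The formula translates directly into code once each PM term is treated as a sum of meter readings over the same period. In this Python sketch the function name, the parameter grouping, and the sample readings in the test are illustrative assumptions.

```python
from typing import Iterable


def pue(input_kwh: Iterable[float], it_kwh: Iterable[float],
        other_kwh: Iterable[float] = ()) -> float:
    """PUE = (PM1 + PM2 - PM4) / PM3 over one measurement period.

    input_kwh: energy at the data center supply inputs (mains, diesel generator)
    it_kwh:    readings at each UPS output / column-head cabinet input, summed as PM3
    other_kwh: non-data-center loads on the same supply, e.g. offices (PM4)
    """
    total = sum(input_kwh) - sum(other_kwh)
    it_total = sum(it_kwh)
    if it_total <= 0:
        raise ValueError("IT energy must be positive")
    return total / it_total
```

With 1500 kWh from the mains, 100 kWh from the generator, 200 kWh of office load, and two UPS outputs of 600 and 400 kWh, the result is 1400 / 1000 = 1.4.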

In actual measurement, limitations of measurement locations, measuring instruments, and shared power supplies sometimes mean that power consumption cannot be measured directly or that the measured value is inaccurate, so indirect measurement and estimation must be used. Some documents distinguish PUE measurement levels 1, 2, and 3 according to where IT energy consumption is measured. Since this distinction has little effect on the PUE value, it is not necessary to measure PUE at different levels.

For cooling energy consumption: data centers with water-cooled air conditioning usually share chillers with the offices in the same building. To determine the power consumed by data center cooling, measure or estimate the ratio of the data center's heat load to the other heat loads (based on water flow rate, water temperature settings, and so on), and then allocate the corresponding share of chiller power consumption to the data center. The same method can be used for indirect measurement and estimation when calculating pPUE (local PUE) for an area that shares a cooling system with other areas.
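The allocation can be sketched in two steps: estimate each loop's heat load from water flow and temperature difference (Q = m·c_p·ΔT), then split chiller energy in proportion. The water density of 1000 kg/m³ and specific heat of 4.186 kJ/(kg·K) are assumed round values, and the function names are hypothetical.

```python
WATER_DENSITY_KG_PER_M3 = 1000.0   # assumed
WATER_CP_KJ_PER_KG_K = 4.186       # assumed specific heat of water


def heat_load_kw(flow_m3_per_h: float, delta_t_k: float) -> float:
    """Heat carried by a chilled-water loop: Q = m_dot * c_p * dT, in kW."""
    mass_flow_kg_per_s = flow_m3_per_h * WATER_DENSITY_KG_PER_M3 / 3600.0
    return mass_flow_kg_per_s * WATER_CP_KJ_PER_KG_K * delta_t_k   # kJ/s == kW


def allocate_chiller_energy(chiller_kwh: float, dc_heat_kw: float, other_heat_kw: float) -> float:
    """Share of shared-chiller energy attributed to the data center,
    proportional to its share of the total heat load."""
    total = dc_heat_kw + other_heat_kw
    if total <= 0:
        raise ValueError("total heat load must be positive")
    return chiller_kwh * dc_heat_kw / total
```

For instance, a data center loop at 36 m³/h and an office loop at 12 m³/h with the same 5 K temperature difference carry heat in a 3:1 ratio, so 1000 kWh of chiller energy allocates 750 kWh to the data center.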

For the power supply and distribution system: if it is difficult to install test equipment at the specified measurement point, energy consumption can be calculated indirectly from the efficiency of the relevant equipment. For example, if the total energy consumption of the data center cannot be measured directly before the transformer in a PUE measurement, it can be calculated from the value measured after the transformer.
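The transformer example amounts to dividing the downstream measurement by the transformer efficiency. In this Python sketch the function name is hypothetical, and the efficiency value is something the user must supply; since transformer efficiency varies with load, it should correspond to the actual operating load rather than a single nameplate figure.

```python
def energy_before_transformer(measured_after_kwh: float, efficiency: float) -> float:
    """Back-calculate transformer input energy from its output: E_in = E_out / eta."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return measured_after_kwh / efficiency
```

With an assumed efficiency of 98%, 980 kWh measured after the transformer corresponds to about 1000 kWh before it.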

To keep errors in the calculated indicators from becoming excessive, and to catch outright calculation mistakes, the indicators can be checked against one another. For example, the relationship PUE ≈ CLF + PLF + 1 gives a rough check on the accuracy of all three.
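The cross-check can be automated as a plausibility test on each period's figures. In this Python sketch the 5% relative tolerance is an assumed threshold; some deviation is expected because loads such as lighting fall outside both CLF and PLF.

```python
from typing import Dict


def cross_check(total_kwh: float, it_kwh: float, cooling_kwh: float,
                power_kwh: float, tolerance: float = 0.05) -> Dict[str, object]:
    """Compute PUE, CLF and PLF for one period and flag the set as suspect when
    PUE deviates from CLF + PLF + 1 by more than `tolerance` (relative)."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    pue = total_kwh / it_kwh
    clf = cooling_kwh / it_kwh
    plf = power_kwh / it_kwh
    estimate = clf + plf + 1.0
    deviation = abs(pue - estimate) / pue
    return {"PUE": pue, "CLF": clf, "PLF": plf, "estimate": estimate,
            "consistent": deviation <= tolerance}
```

With 1600 kWh total, 1000 kWh IT, 450 kWh cooling, and 120 kWh distribution losses, PUE is 1.60 against an estimate of 1.57, which passes; if the cooling reading were 200 kWh, the estimate would drop to 1.32 and the set would be flagged for review.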

The value of an energy consumption indicator is affected by many factors and changes with the season, holidays, and busy hours within the day. To understand the energy efficiency of the data center comprehensively and accurately, its energy (power) consumption should be measured and recorded continuously over the long term, and PUE should be calculated by month, quarter, and year.
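When rolling continuous records up into monthly, quarterly, or yearly PUE, one reasonable choice, assumed in this Python sketch, is to sum energy over the period first and divide once, rather than averaging daily PUE values, so that high-load days carry proportionally more weight.

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Tuple


def pue_by_period(daily: Iterable[Tuple[date, float, float]],
                  period: str = "month") -> Dict[tuple, float]:
    """daily: (day, total_kwh, it_kwh) records. Returns {period key: PUE},
    where PUE is total energy / IT energy summed over the whole period."""
    sums: Dict[tuple, list] = defaultdict(lambda: [0.0, 0.0])
    for day, total_kwh, it_kwh in daily:
        if period == "month":
            key = (day.year, day.month)
        elif period == "quarter":
            key = (day.year, (day.month - 1) // 3 + 1)
        elif period == "year":
            key = (day.year,)
        else:
            raise ValueError(f"unknown period: {period}")
        sums[key][0] += total_kwh
        sums[key][1] += it_kwh
    return {key: total / it for key, (total, it) in sorted(sums.items())}
```

For two January days of 150/100 kWh and 400/200 kWh, the energy-weighted monthly PUE is 550/300 ≈ 1.83, whereas averaging the daily values 1.5 and 2.0 would give 1.75.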

Third, the master control center system

The master control center system includes two important modules: the alarm module and the large-screen control module.

1, alarm module

When an alarm occurs in the system or in a monitored object, the alarm module notifies users by SMS, telephone call, email, sound and light, and similar means, so that the fault can be resolved quickly. In a typical monitoring and management system, alarms are handled centrally, so the alarm module usually provides open access interfaces, such as socket and Web Service interfaces, through which the other sub-modules of the monitoring and management system call the alarm service. Its output methods typically include, but are not limited to, SMS, telephone, email, and sound and light; it can also interface with the enterprise SMS gateway and publish alarm information through a unified information platform.
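The centralized alarm service can be sketched as a registry of pluggable output channels that other sub-modules call through one entry point. In this Python sketch the class, method names, and channel names are hypothetical, and the socket or Web Service front end that would receive requests from other modules is not shown.

```python
from typing import Callable, Dict, Iterable

# A sender takes (recipient, message) and returns True if delivery was accepted.
Sender = Callable[[str, str], bool]


class AlarmService:
    """Central alarm service called by other sub-modules. Channels are stubs."""

    def __init__(self) -> None:
        self._channels: Dict[str, Sender] = {}

    def register(self, name: str, sender: Sender) -> None:
        """Plug in an output channel such as SMS, telephone, email, or sound and light."""
        self._channels[name] = sender

    def raise_alarm(self, message: str, recipient: str, channels: Iterable[str]) -> Dict[str, bool]:
        """Deliver one alarm on each requested channel and report per-channel success.
        A failing or unknown channel never blocks the others."""
        results: Dict[str, bool] = {}
        for name in channels:
            sender = self._channels.get(name)
            if sender is None:
                results[name] = False
                continue
            try:
                results[name] = bool(sender(recipient, message))
            except Exception:
                results[name] = False
        return results
```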

The alarm module is the terminal for information exchange, so the accuracy of the information it delivers is very important. If too many alarm messages are sent through it, the really important information is drowned out, which can lead to major accidents. The information input to the alarm module must therefore be filtered for validity; that is, the complex event analysis module must analyze and process alarm information before it is issued. The effectiveness of the complex event analysis module thus determines the effectiveness of the alarm module's information exchange.

As the main output channel for valid alarm information, the alarm module must also ensure that the information reaches its target. While it runs, program crashes, network failures, machine downtime, and similar events can cause alarm information to be lost at any time and delay fault handling. The alarm module should therefore have fault-tolerance mechanisms such as retransmission and resumption from breakpoints, and, in line with the tier requirements of the data center, it also needs a corresponding redundant design.

Alarm channels are themselves unreliable: a phone call may not get through, a mail server may fail, and so on. To guarantee delivery, the alarm module is generally also designed with an alarm escalation function: high-level events (by service level) and events that remain unhandled past a timeout are escalated under various conditions. Escalation can change the alarm recipient; for example, when on-duty person A does not answer the phone, the alarm escalates to A's supervisor after the retries fail. It can also change the alarm mode, for example from a sound-and-light alarm in the master control center to SMS and telephone alarms. Complex situations combine the two.
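Both kinds of escalation can be expressed as one ordered chain of (recipient, channel) steps, each retried before moving on. This Python sketch is a simplification: the function and type names are hypothetical, retries happen back to back without delay or backoff, and timeout-based escalation of unhandled events and persistence for breakpoint recovery are not shown.

```python
from typing import Callable, List, Optional, Sequence, Tuple

Sender = Callable[[str, str], bool]
Step = Tuple[str, str, Sender]          # (recipient, channel name, sender)
Attempt = Tuple[str, str, int, bool]    # (recipient, channel, attempt number, delivered)


def deliver_with_escalation(message: str, chain: Sequence[Step],
                            retries_per_step: int = 2
                            ) -> Tuple[Optional[Tuple[str, str]], List[Attempt]]:
    """Walk an escalation chain in order, retrying each step before moving on.

    The chain can escalate the recipient (duty officer A, then A's supervisor),
    the channel (sound and light, then SMS, then phone), or both. Returns the
    (recipient, channel) that succeeded, or None, plus an attempt log."""
    attempts: List[Attempt] = []
    for recipient, channel, send in chain:
        for n in range(1, retries_per_step + 1):
            try:
                delivered = bool(send(recipient, message))
            except Exception:   # a crashed channel counts as a failed attempt
                delivered = False
            attempts.append((recipient, channel, n, delivered))
            if delivered:
                return (recipient, channel), attempts
    return None, attempts
```

The attempt log doubles as an audit trail showing who was tried, on which channel, and how many times before the alarm got through.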

2, large screen control module

The master control center is where the operation and maintenance team of a large or medium-sized data center carries out operational monitoring. The staff rely mainly on the information shown on the large screen of the monitoring and management system to understand and analyze the operating conditions of a huge and complex set of systems and equipment. Because the monitored objects are complex, on-duty personnel often need to analyze the operating status of the data center from several dimensions at once, which requires multiple screens showing the status from different dimensions. The richer and clearer the monitoring and fault information shown on the large screen from multiple dimensions, the easier it is for operators to find and solve problems in time. For this reason, the data center monitoring and management center (or ECC) is equipped with a large-screen display system made up of multiple screens.

The application of the large screen display module in the data center generally has two methods: one is to use a professional intelligent screen control system, and the other is to use a simple LCD screen combination system.

(1) Intelligent Screen Control System

The intelligent screen control system, also called a multi-screen splicing processor, is the core functional unit of a large-screen display system. A large-screen video wall system generally consists of screen control software and a video wall processor, which together provide display functions such as splitting the large screen and opening windows on it.

The intelligent screen control system uses a pure hardware architecture based on very-large-scale FPGAs, relies on high-bandwidth network switching, and uses a pixel-based image scaling engine with a distributed modular design to deliver flexible, convenient, high-performance display control for large-screen video walls.

The intelligent screen control system supports plug and play and management of large numbers of signals; input from DVI, VGA, HDMI, Video, and other signal sources; multiple display walls, multi-screen signal sharing, and multi-screen linkage; free splicing, separate window splicing, and drag-and-drop of any signal; image zooming, multi-picture display, roaming, overlay, large-resolution base maps, and large-screen display of ultra-high-resolution dynamic images; large-screen echo and recording; digital signage; multiple groups of display presets; and multiple users with flexible control.

For the large-screen display system of the master control center, large-screen control alone is not enough. To make the content on each screen related, complementary, and interactive, the display pages of the monitoring and management software itself also need to support multi-window display and display linkage control.

The intelligent screen control system is generally used in the central control center of large and medium-sized data centers.

(2) Simple multi-screen splicing system

For the monitoring room of a small or medium-sized data center, economy and practicality mean that an intelligent screen control system is not necessarily required. In this case, a small video wall can be built from a multi-output graphics card and several displays. Because a multi-output graphics card has a limited number of outputs, this solution generally supports only a limited number of windows. When Windows is used to manage the LCD displays, the displays can be mapped into one large virtual display, with the split-screen display module handling the splitting and layout of business views; alternatively, each LCD can be mapped as an independent display unit showing its own business view. Either way, as with the large-screen video wall system, the split-screen display software module alone can provide the corresponding multiple business view windows.
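When several LCDs are mapped into one virtual display, the split-screen module has to work out where each physical screen sits on the virtual desktop and which rectangle a business view should occupy. This Python sketch assumes identical monitors in a regular grid with no bezel compensation; the function names are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Rect = Tuple[int, int, int, int]  # x, y, width, height in virtual-desktop pixels


def monitor_rects(rows: int, cols: int, width: int, height: int) -> Dict[Tuple[int, int], Rect]:
    """Map a rows x cols grid of identical monitors onto one virtual display."""
    return {(r, c): (c * width, r * height, width, height)
            for r in range(rows) for c in range(cols)}


def span(rects: Dict[Tuple[int, int], Rect], cells: Iterable[Tuple[int, int]]) -> Rect:
    """Smallest rectangle covering the given monitors, e.g. to stretch one
    business view across a 2 x 2 block of screens."""
    chosen = [rects[cell] for cell in cells]
    x0 = min(x for x, _, _, _ in chosen)
    y0 = min(y for _, y, _, _ in chosen)
    x1 = max(x + w for x, _, w, _ in chosen)
    y1 = max(y + h for _, y, _, h in chosen)
    return (x0, y0, x1 - x0, y1 - y0)
```

On a 2 x 3 wall of 1920 x 1080 monitors, the top-left 2 x 2 block spans (0, 0, 3840, 2160), leaving the right-hand column for independent views.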

(3) Application of Large Screen Control Module

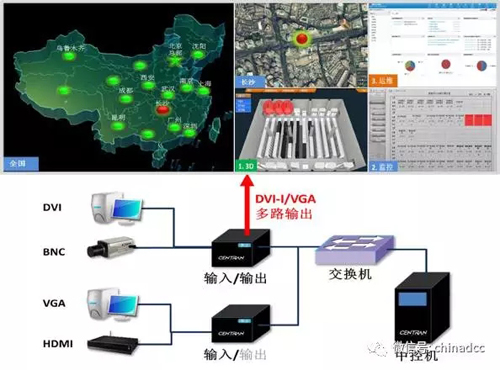

Figure 4 Large screen display system application diagram

The large-screen display module can configure display combinations of different application scenarios according to business needs.

Monitoring information display

For example, consider a global monitoring view, such as a nationwide view of the monitoring status of networked data centers. A main (master) screen can be designed that combines four display units. Each of the other monitoring and management sub-business systems is then shown on a single display unit designed as a slave screen. This enables real-time monitoring of the entire system, from the global level down to the local level. Each sub-business system can also define a page polling policy that displays its key monitoring indicators in turn. Once an alarm occurs on a monitoring page, the display stays on that page and prompts the current alarm information.
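A page polling policy with alarm pinning amounts to a small state machine. The sketch below assumes one display unit and one timer tick per polling interval. When rotation reaches a page with an active alarm, it stays there until the alarm clears. Jumping straight to an alarming page would be an equally valid policy. `PollingScreen` and its methods are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class PollingScreen:
    """One display unit that rotates through monitoring pages.

    A page with an active alarm stays on screen until the alarm is cleared.
    """
    pages: List[str]
    index: int = 0
    active_alarms: Dict[str, str] = field(default_factory=dict)

    def raise_alarm(self, page: str, message: str) -> None:
        self.active_alarms[page] = message

    def clear_alarm(self, page: str) -> None:
        self.active_alarms.pop(page, None)

    def current(self) -> Tuple[str, Optional[str]]:
        """The page on screen and the alarm text to prompt, if any."""
        page = self.pages[self.index]
        return page, self.active_alarms.get(page)

    def tick(self) -> Tuple[str, Optional[str]]:
        """Advance one polling interval unless the current page is alarming."""
        if self.pages[self.index] not in self.active_alarms:
            self.index = (self.index + 1) % len(self.pages)
        return self.current()
```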

Linkage can also be set up between the master screen and the slave screens, and among the slave screens themselves. For example, when an operator works on a business subsystem in the master screen, that subsystem's display unit switches to its indicator monitoring page. The preset function of the screen control module can also store a variety of monitoring display templates, which users invoke as the scenario requires.
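Master-to-slave linkage and presets can be modeled with a table that maps each subsystem to a slave screen, plus saved snapshots of what every screen shows. The sketch below covers only master-to-slave linkage, and slave-to-slave links would follow the same pattern. `ScreenWall`, the screen ids and the `"<subsystem>:indicators"` view naming are all hypothetical.

```python
from typing import Dict, Optional


class ScreenWall:
    """Master/slave screen linkage plus display presets (hypothetical model)."""

    def __init__(self, slaves: Dict[str, str]) -> None:
        # slave screen id -> view currently shown on it
        self.views: Dict[str, str] = dict(slaves)
        # subsystem name -> slave screen hosting that subsystem's display unit
        self.linkage: Dict[str, str] = {}
        # preset name -> snapshot of all slave views
        self.presets: Dict[str, Dict[str, str]] = {}

    def link(self, subsystem: str, slave: str) -> None:
        self.linkage[subsystem] = slave

    def on_master_operation(self, subsystem: str) -> Optional[str]:
        """Operator works on `subsystem` in the master screen.

        The linked slave switches to that subsystem's indicator page; the
        affected slave id is returned, or None if no linkage is configured.
        """
        slave = self.linkage.get(subsystem)
        if slave is not None:
            self.views[slave] = f"{subsystem}:indicators"
        return slave

    def save_preset(self, name: str) -> None:
        self.presets[name] = dict(self.views)

    def load_preset(self, name: str) -> None:
        self.views = dict(self.presets[name])
```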

Alarm information display and analysis

When a fault needs to be analyzed and investigated, several screens can each show one view of it:

- one screen shows a 3D view of the device's physical location (if necessary, a video screen shows live video of the device, combining the virtual and real views);
- one screen shows its 2D logical relationships (such as topology);
- one screen shows the fault details;
- another shows the relevant knowledge base entries or emergency plans.

Correlating these kinds of fault information helps identify the root cause quickly. It also helps the organization dispatch operation and maintenance staff precisely, which improves data center availability.

Management information display and analysis

To compare energy consumption across machine room modules, the PUE, CLF, and PLF of each machine room can be displayed on different screens. To get a global view of the SPC (space, power, and cooling) capacity of all machine rooms, the SPC of each room can likewise be shown on a separate screen. For operational analysis, the following can be displayed on different screens:

- monthly, quarterly, and annual operating conditions;
- year-on-year and period-on-period (month-on-month) comparisons;
- summaries.

These common display scenarios can be saved as presets and recalled when needed, which improves work efficiency.
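The sketch below computes these indicators for each machine room. It assumes the usual definitions:

- PUE is total facility energy divided by IT equipment energy.
- CLF (cooling load factor) is cooling energy divided by IT energy.
- PLF (power load factor) is power supply and distribution energy divided by IT energy.

Under these definitions, PUE = 1 + CLF + PLF + (other loads / IT). The field names and the `relative_change` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RoomEnergy:
    """Energy consumed by one machine room over a reporting period, in kWh."""
    it: float           # IT equipment
    cooling: float      # cooling system
    power: float        # power supply and distribution losses (UPS, transformers, ...)
    other: float = 0.0  # lighting and other auxiliary loads

    @property
    def total(self) -> float:
        return self.it + self.cooling + self.power + self.other

    def pue(self) -> float:
        return self.total / self.it

    def clf(self) -> float:
        return self.cooling / self.it

    def plf(self) -> float:
        return self.power / self.it


def relative_change(current: float, baseline: float) -> float:
    """Relative change versus a baseline period.

    Use the same period last year for year-on-year, or the immediately
    preceding period for period-on-period (ring) comparison.
    """
    return (current - baseline) / baseline
```

For example, a room that used 1000 kWh for IT, 400 kWh for cooling, 100 kWh in power distribution and 20 kWh for lighting has PUE 1.52, CLF 0.4 and PLF 0.1.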

Fourth, the basic service system

1, the database module

Depending on the business data stored and the implementation technology, the database module is divided into three types: the real-time database module, the historical database module, and the configuration management database module.

(1) Real-time database module

Because business requirements differ in how time-critical their data is, the monitoring and management system separates business data into two types of databases: a real-time database and a historical database.

A real-time database (RTDB) is a branch of database systems that combines database technology with real-time processing technology. It is dedicated to handling time-stamped data, which has three characteristics: a high update frequency, large volumes of concurrent data, and a close correlation between data and time. Real-time data acquisition produces highly concurrent, continuous data streams. Traditional databases are not well suited to stream processing, so the data storage strategy requires careful consideration. In the monitoring system, the real-time database serves as a cache for high-speed data access. It provides services such as real-time point access and real-time event access.
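The two access services can be illustrated with an in-memory store. Point access reads the latest value of a point. Event access pushes each new sample to subscribers. This is a single-process, non-thread-safe sketch and is not a real RTDB product API. It assumes a bounded ring buffer of recent samples per point, and `RealTimeDB` and its methods are hypothetical.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict, List, Optional, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, value)


class RealTimeDB:
    """Latest value per point, a short ring buffer of recent samples,
    and callbacks for real-time event access."""

    def __init__(self, depth: int = 100) -> None:
        self._depth = depth
        self._recent: Dict[str, Deque[Sample]] = {}
        self._subscribers: Dict[str, List[Callable[[str, Sample], None]]] = {}

    def write(self, point: str, value: float, ts: Optional[float] = None) -> None:
        sample = (time.time() if ts is None else ts, value)
        # deque(maxlen=...) silently drops the oldest sample when full
        buf = self._recent.setdefault(point, deque(maxlen=self._depth))
        buf.append(sample)
        for callback in self._subscribers.get(point, []):
            callback(point, sample)  # push the new value to event consumers

    def latest(self, point: str) -> Optional[Sample]:
        """Real-time point access: the newest sample, or None if unknown."""
        buf = self._recent.get(point)
        return buf[-1] if buf else None

    def recent(self, point: str) -> List[Sample]:
        return list(self._recent.get(point, ()))

    def subscribe(self, point: str, callback: Callable[[str, Sample], None]) -> None:
        self._subscribers.setdefault(point, []).append(callback)
```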

The most important characteristic of a real-time database is timeliness. The database must be able to store every sampled value in time, so its access latency must not exceed the sampling period. It must also use specific mechanisms to ensure that data consumers obtain fresh data promptly.
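The timeliness rule can be written as two simple checks. First, every measured write latency must fit within one sampling period, so each sample is stored before the next arrives. Second, a consumer can treat a value as stale once it is older than some multiple of the sampling period. The function names and the default tolerance of two periods are illustrative assumptions.

```python
from typing import Iterable


def meets_timeliness(write_latencies_s: Iterable[float], sampling_period_s: float) -> bool:
    """True if every measured write latency fits within one sampling period,
    i.e. each sample is stored before the next one arrives."""
    return all(lat <= sampling_period_s for lat in write_latencies_s)


def is_fresh(sample_ts: float, now: float, sampling_period_s: float, tolerance: float = 2.0) -> bool:
    """Treat a value as fresh if it is at most `tolerance` sampling periods old.

    The default of 2 periods is an illustrative assumption, not a standard figure.
    """
    return now - sample_ts <= tolerance * sampling_period_s
```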

Another characteristic of real-time databases is the diversity of the information they store. Because real-time databases process data at high speed, a growing number of performance-sensitive applications use them as application caches to speed up processing.

As data centers grow larger, so does the volume of real-time data that must be managed. The processing performance and load capacity required of the real-time database module therefore keep rising.

(2) Historical database module

The real-time database module provides data sources for real-time data calculation, and the historical database module provides data sources for later data analysis, statistics, and mining.

The historical database is an intermediate database that supports online transaction processing and data mining. It dumps the real-time data stream from the real-time database into intermediate storage for later analysis and processing. It should offer good fault tolerance to make backup and recovery easy. It should also provide a good data access interface to support data analysis.

Because the business keeps developing and changing, the historical database module must first of all adapt to business changes. It therefore generally supports describing business rules. Predefined rules extract and transform the raw data into the desired business data. When the business changes, only the corresponding rule descriptions need adjusting, so the module can quickly adapt to new services.
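The benefit of describing business rules as data rather than code can be shown with a small rule interpreter. Each rule names an output metric, a source point, an aggregation and an optional scale factor. Adapting to a new business need then means editing the rule list only. The rule keys and the `apply_rules` function are hypothetical. A production history database would use its own rule language.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

Sample = Tuple[float, float]  # (timestamp, value)

# Aggregations a rule may name; picking among them is pure configuration.
AGGREGATES: Dict[str, Callable[[List[float]], float]] = {
    "avg": mean,
    "max": max,
    "min": min,
    "sum": sum,
}


def apply_rules(raw: Dict[str, List[Sample]], rules: List[dict]) -> Dict[str, float]:
    """Derive business metrics from raw point data using declarative rules.

    Each rule is a dict with hypothetical keys:
      "output": name of the business metric,
      "point":  source measurement point,
      "agg":    one of AGGREGATES,
      "scale":  optional multiplier (e.g. a unit conversion).
    Rules whose source point has no data are skipped.
    """
    result: Dict[str, float] = {}
    for rule in rules:
        values = [v for _, v in raw.get(rule["point"], [])]
        if not values:
            continue
        agg = AGGREGATES[rule["agg"]]
        result[rule["output"]] = agg(values) * rule.get("scale", 1.0)
    return result
```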

Another challenge for historical databases is storing and retrieving large volumes of data. A very large data center has hundreds of thousands of monitoring and measurement points. If their data is stored without any processing, the volume grows by gigabytes per day. Compressing data before storage and designing the database sensibly are therefore crucial to storage and retrieval performance at this scale.
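The storage arithmetic and one simple pre-storage compression technique can be sketched together. The compression technique is a deadband (exception) filter: a sample is kept only when it moves more than a set amount from the last stored value. Production historians often use more elaborate algorithms such as swinging-door compression. The point count, sampling interval and 16 bytes per sample below are illustrative assumptions, not figures from the text.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp, value)


def daily_raw_bytes(points: int, sample_interval_s: float, bytes_per_sample: int = 16) -> float:
    """Uncompressed bytes per day for `points` points sampled every `sample_interval_s` seconds."""
    samples_per_day = 86_400 / sample_interval_s
    return points * samples_per_day * bytes_per_sample


def deadband_compress(samples: List[Sample], deadband: float) -> List[Sample]:
    """Keep a sample only if it differs by more than `deadband` from the last kept value.

    The first and last samples are always kept so the stored series
    spans the whole input.
    """
    if not samples:
        return []
    kept = [samples[0]]
    for ts, value in samples[1:-1]:
        if abs(value - kept[-1][1]) > deadband:
            kept.append((ts, value))
    if len(samples) > 1:
        kept.append(samples[-1])
    return kept
```

With these assumptions, 200,000 points sampled every 10 seconds produce 27,648,000,000 bytes, about 27.6 GB, per day before compression.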

(3) Configuration Management Database Module

The configuration management database (CMDB) is not merely a relational database, nor is it a corporate asset repository. It stores all software and hardware components (not only computer hardware and software), which are called configuration items (CIs). It also stores the relationships between these configuration items. The CMDB is the core of the monitoring management system's business service management strategy and its single source of configuration information, which guarantees that this information is unique and accurate.
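A minimal model shows the two things the CMDB holds: uniquely identified configuration items and the typed relationships between them. Traversing those relationships answers impact questions such as "what does this UPS feed?". The class names, relationship labels and breadth-first `impacted_by` query below are illustrative assumptions and not a standard CMDB schema.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class ConfigurationItem:
    ci_id: str
    ci_type: str  # e.g. "UPS", "PDU", "rack", "server"
    attributes: Dict[str, str] = field(default_factory=dict)


class CMDB:
    """Toy CMDB: unique CIs plus typed, directed relationships ("A feeds B")."""

    def __init__(self) -> None:
        self.items: Dict[str, ConfigurationItem] = {}
        self.relations: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add(self, ci: ConfigurationItem) -> None:
        if ci.ci_id in self.items:
            # single source of truth: reject duplicate CI ids
            raise ValueError(f"duplicate CI {ci.ci_id}")
        self.items[ci.ci_id] = ci

    def relate(self, src: str, relation: str, dst: str) -> None:
        for ci_id in (src, dst):
            if ci_id not in self.items:
                raise KeyError(ci_id)
        self.relations[src].append((relation, dst))

    def impacted_by(self, ci_id: str) -> Set[str]:
        """All CIs reachable downstream of `ci_id` (breadth-first)."""
        seen: Set[str] = set()
        queue = deque([ci_id])
        while queue:
            current = queue.popleft()
            for _, dst in self.relations.get(current, []):
                if dst not in seen:
                    seen.add(dst)
                    queue.append(dst)
        return seen
```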

The configuration management database module is the soul of the monitoring management system. How its model is built determines the system's management efficiency and effectiveness.

2, hot standby module

GB 50174-2008 sets requirements for machine room availability levels, so the monitoring and management system of a high-level data center should have a matching redundant design. Within the basic service system, the dual-system hot standby module is an important common module for implementing this redundancy.

(1) Classification and Definition of Hot Standby

Hot standby uses two servers that back each other up and run the same service. When one server fails, the other takes over automatically, so the system continues to provide services without manual intervention. Dual-system hot standby thus relies on the standby server to keep service uninterrupted when the primary server fails.

By working mode, there are two types of hot standby: active/standby and active/active.

Active/standby is also called primary/standby mode. When the primary server fails, the standby server promptly takes over its services. In this mode only one server is ever active, while the other waits in an inactive state.
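The takeover in active/standby mode is commonly driven by heartbeats: the standby promotes itself once the active node has been silent for longer than a timeout. The sketch below shows only that decision logic, with caller-supplied timestamps. It omits the fencing and quorum mechanisms that real clusters need to avoid split brain. `Node`, `ActiveStandbyPair` and the 3-second timeout are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    last_heartbeat: float = 0.0  # seconds, caller-supplied clock


class ActiveStandbyPair:
    """Heartbeat-driven active/standby decision logic (no fencing or quorum)."""

    def __init__(self, primary: Node, standby: Node, timeout: float = 3.0) -> None:
        self.active, self.standby = primary, standby
        self.timeout = timeout

    def heartbeat(self, name: str, now: float) -> None:
        for node in (self.active, self.standby):
            if node.name == name:
                node.last_heartbeat = now

    def check(self, now: float) -> Optional[str]:
        """Swap roles if the active node timed out and the standby is alive.

        Returns the name of the newly active node, or None if nothing changed.
        """
        active_silent = now - self.active.last_heartbeat > self.timeout
        standby_alive = now - self.standby.last_heartbeat <= self.timeout
        if active_silent and standby_alive:
            self.active, self.standby = self.standby, self.active
            return self.active.name
        return None
```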

In active/active mode, both devices work at the same time and provide the same external services, and a client can access either machine to complete the required service. This achieves simple load balancing and also minimizes failover time.

(2) The choice of hot standby

The choice of working mode mainly depends on the operating characteristics of the application service running on the hot standby module. If the application allows multiple instances to run at the same time, active/active is a good choice. If it allows only one running instance at a time, only active/standby can be used.
