Since 2012, deep learning has delivered one breakthrough after another on classic artificial intelligence problems. With artificial intelligence developing so rapidly, wouldn't you like to understand its basic working principles?

To figure out what deep learning is, start with artificial intelligence. Since the term "artificial intelligence" was established in computer science at the Dartmouth Conference in 1956, there has been no shortage of visions for it. We dream of a magical machine with the five human senses (or even more), reasoning power, and human-like thinking. Although that dream has not yet come true, narrower forms of artificial intelligence have become widespread, such as image recognition, speech recognition, and multi-language translation.

Machine learning is an important way to implement artificial intelligence. The concept comes from early artificial intelligence researchers. In simple terms, machine learning uses algorithms to analyze data, learn from it, automatically summarize what was learned into a model, and finally use that model to make inferences or predictions. Unlike traditional software development, where programmers write the rules by hand, machine learning fits the model to a large amount of data, a process called "training."
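The train-then-predict cycle described above can be illustrated with a minimal sketch. It assumes the simplest possible model, a straight line fitted by ordinary least squares; the function names `train` and `predict` and the data points are hypothetical and chosen only for illustration.

```python
def train(xs, ys):
    """Fit y = slope * x + intercept to the data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y, and variance of x (both unnormalized).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the trained model to make a prediction for a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data that happens to follow y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

model = train(xs, ys)             # "training": summarize the data into a model
prediction = predict(model, 10.0) # "inference": apply the model to unseen input
print(model, prediction)
```

No rule such as "multiply by two and add one" is written into the program; the parameters are recovered from the data, which is the key difference from hand-coded logic.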

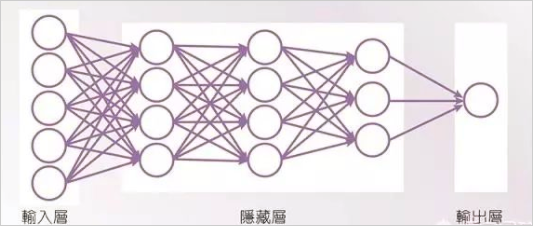

Deep learning is a machine learning approach that has been highly regarded in recent years. It is rooted in neural network models, but today's deep learning technology is very different from its predecessors. The strongest speech recognition and image recognition systems at present are built with deep learning. The AI camera features promoted by various manufacturers and the widely known AlphaGo are both based on deep learning; only the application scenarios differ.

The foundation of deep learning is big data, and the path to implementation is cloud computing. As long as there is sufficient data and fast enough computation, the resulting "results" (the macroscopic expression of some intelligent function of the machine) will be more accurate. At present, intelligent computation built on big data and cloud computing is best explained in the framework of deep neural networks.

Deep neural networks, also known as deep learning, are an important branch of artificial intelligence. Deep neural networks are currently the basis for many modern AI applications. Since deep neural networks have demonstrated breakthrough results in speech and image recognition tasks, the number of applications using deep neural networks has exploded.

At present, deep neural network methods are widely used in autonomous driving, speech recognition, image recognition, game AI, and similar fields. In many of these areas, deep neural networks differ from earlier approaches in which experts extracted features or wrote rules by hand. Their superior performance comes from the ability to apply statistical learning to large amounts of data and extract high-level features from the raw data, thereby building an effective representation of the input space.

In fact, building this representation means computing over large amounts of data, because the very high accuracy achieved on a particular task comes at the expense of very high computational complexity.
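To give a rough sense of that computational cost, the sketch below counts multiply-accumulate operations (MACs) for one forward pass through a stack of fully connected layers. The layer sizes are hypothetical, and real networks also use convolutional and other layer types whose costs are computed differently.

```python
def dense_macs(num_inputs, num_outputs):
    """MACs for one fully connected layer: every output sums over every input."""
    return num_inputs * num_outputs


def network_macs(layer_sizes):
    """Total MACs for one forward pass through consecutive dense layers."""
    return sum(dense_macs(a, b) for a, b in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 784 input pixels, two hidden layers, 10 output classes.
small = [784, 512, 512, 10]
# The same network with five extra hidden layers of the same width.
deeper = [784] + [512] * 7 + [10]

small_total = network_macs(small)
deeper_total = network_macs(deeper)
print(small_total, deeper_total)
```

Each additional 512-wide hidden layer adds 512 × 512 = 262,144 MACs per input, and that cost is paid again for every image processed, which is why throughput and energy per operation matter so much.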

The compute engine, especially the GPU, is the foundation of deep neural networks. Methods that improve the energy efficiency and throughput of deep neural networks without sacrificing accuracy or increasing hardware cost are therefore crucial for their wider use in AI systems.

At present, researchers at some well-known large domestic companies are focusing on dedicated acceleration methods for deep neural network computing and have begun to develop artificial intelligence chips in the true sense of the term.

An artificial intelligence chip is generally an ASIC (application-specific integrated circuit) designed for artificial intelligence algorithms. Although traditional CPUs and GPUs can also execute these algorithms, they are either slow at the computation or high in power consumption, and these shortcomings make them unusable in many situations.

For example, an autonomous car needs an artificial intelligence chip because it must recognize pedestrians, traffic lights, and other changing road conditions while driving, and these situations can arise suddenly. If we used a traditional CPU for this computation, its speed would be too slow, because the CPU is not dedicated to artificial intelligence workloads. The green light might already have turned red before our self-driving car had braked.

If you switch to a GPU, the computation is indeed much faster, but the power consumption becomes very large. An electric car's battery cannot sustain that load for long, and the heat generated by high-power chips warms the vehicle and creates a safety risk. Moreover, GPUs are generally expensive, and few ordinary consumers could afford an autonomous vehicle that uses a large number of them. In the field of artificial intelligence, therefore, the development of dedicated chips has become an inevitable trend.

Implementation paths for dedicated chips in different scenarios

The artificial intelligence chips currently available on the market can be divided into two categories according to the tasks they process.

- Chips for both training and inference. GPUs can do this work, CPUs can do it, and FPGAs can do it too. But a dedicated artificial intelligence chip can do it better, because it is specialized: it is the equivalent of an "expert."

- Inference acceleration chips. This kind of chip runs an already trained neural network model on the chip. For example, the Cambricon neural network chip, the DPU from DeePhi Tech (Shenjian Technology), and the BPU from Horizon Robotics are all such products.

If divided by usage scenarios, artificial intelligence chips are mainly divided into cloud and terminal chips.

At present, mainstream deep learning algorithms involve two phases: training and inference. Since training an artificial neural network requires a large amount of data, training is mainly carried out in the cloud. For example, Baidu launched the Kunlun chip at its AI Developer Conference in 2018, describing it as China's first full-featured cloud AI chip. Terminal chips focus more on low cost and low power consumption, and China's artificial intelligence chip start-ups are currently concentrated mainly in this field.

So how does an artificial intelligence chip work? Within the field of neural networks there is a subfield called deep learning. The original neural networks usually had only a few layers. Deep networks have more: today's networks generally have more than five layers, and some reach more than a thousand.

The current interpretation of how deep neural networks work in vision applications is as follows: after all the pixels of an image are fed into the first layer of the network, the weighted sums computed by that layer can be interpreted as representing low-order features of the image. As the layers get deeper, these features are combined to represent higher-order image features.
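As a toy illustration of this layer-by-layer view, the sketch below pushes a four-pixel "image" through two layers of weighted sums. The weights are set by hand purely for illustration (a real network learns them during training), and the ReLU activation and all names here are assumptions rather than details given in this article.

```python
def relu(values):
    """Keep positive responses and clamp negative ones to zero."""
    return [max(0.0, v) for v in values]


def weighted_sums(inputs, weights, biases):
    """One layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def forward(pixels, layers):
    """Feed the pixels through every layer in order."""
    activation = pixels
    for weights, biases in layers:
        activation = relu(weighted_sums(activation, weights, biases))
    return activation


# Layer 1 (low-order features): a rising edge between pixels 0 and 1,
# and a falling edge between pixels 2 and 3.
# Layer 2 (higher-order feature): fires only when both edges are present,
# i.e. when the image contains a bright bar in the middle.
layers = [
    ([[-1.0, 1.0, 0.0, 0.0],
      [0.0, 0.0, 1.0, -1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-1.0]),
]

bar = forward([0.0, 1.0, 1.0, 0.0], layers)    # bright bar in the middle
blank = forward([0.0, 0.0, 0.0, 0.0], layers)  # empty image
print(bar, blank)
```

The first layer responds to simple local patterns, and the second combines those responses into a more abstract one, which is the same progression the paragraph above describes at a much larger scale.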

Of course, an AI chip that integrates more than 5.5 billion transistors in an area the size of a fingernail is good for more than taking pictures. There are already intelligent applications such as voice services, machine vision recognition, and image processing. In the future, more diverse application types will be added, including medical, AR, and game AI.

So how do functions such as voice services and automatic image processing work at the micro level?

Take an AI-assisted photo of a cat against a cluttered background. In the shallow layers of the network, the weights may "identify" the subject as a tiger, but as the number of layers increases, the results become more and more accurate. The network not only identifies a cat in the picture but also recognizes features of the cat's surroundings: a meadow, a blue sky, the steps the cat is standing on, and so on.

Deep learning networks have been hugely successful in recent years, mainly due to three factors.

The first is the massive amount of information needed to train the network. Learning a valid representation requires a lot of training data. At present, Facebook receives more than 350 million images every day, Walmart generates 2.5 PB of user data every hour, and YouTube has 300 hours of video uploaded every minute. Cloud service providers and many other companies therefore have massive amounts of data with which to train algorithms.

Second is sufficient computing resources. Advances in semiconductor and computer architectures provide sufficient computing power to make it possible to train algorithms in a reasonable amount of time.

Finally, the evolution of algorithmic techniques has greatly improved accuracy and broadened the scope of application of DNNs. Early DNN applications opened the door to algorithm development and inspired many deep learning frameworks (most of them open source), which make it easy for researchers and practitioners to use DNNs.

Currently, DNNs are widely used in many fields, including image and video, voice and language, medicine, games, robotics, and autonomous driving. It is foreseeable that deep neural networks will also find deeper applications in finance (such as trading, energy forecasting, and risk assessment), infrastructure (such as structural safety and traffic control), weather forecasting, and event detection.
